In the world of deep learning, linearity and non-linearity are fundamental concepts that allow neural networks to learn and perform complex tasks. Understanding how they work is key to building effective deep learning models.

What is Linearity?

In mathematics, and in the context of neural networks, a function f is linear if it satisfies two properties:

Additivity - f(x + y) = f(x) + f(y)

Homogeneity - f(αx) = αf(x), where α is a scalar

In simpler terms, a linear function's output is directly proportional to its input: doubling the input doubles the output, and the output for a sum of inputs equals the sum of the individual outputs. Graphed in one dimension, a linear function is a straight line through the origin.
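
The two properties can be spot-checked numerically. The Python sketch below uses a hypothetical helper, `satisfies_linearity`, to test additivity and homogeneity on a handful of sample points. A check like this can only find counterexamples; it cannot prove linearity. Note that `shift`, which computes 3x + 1, draws a straight line but fails both properties, because its line does not pass through the origin.

```python
def satisfies_linearity(f, samples, alphas, tol=1e-9):
    """Spot-check additivity and homogeneity of f on a few sample points.

    Returns False as soon as a counterexample is found. Passing the check
    does not prove that f is linear for every possible input.
    """
    for x in samples:
        for y in samples:
            # Additivity: f(x + y) == f(x) + f(y)
            if abs(f(x + y) - (f(x) + f(y))) > tol:
                return False
        for a in alphas:
            # Homogeneity: f(a * x) == a * f(x)
            if abs(f(a * x) - a * f(x)) > tol:
                return False
    return True


def scale(x):
    return 3.0 * x          # linear: a straight line through the origin


def shift(x):
    return 3.0 * x + 1.0    # a straight line, but not through the origin


def square(x):
    return x * x            # a curve


samples = [-2.0, -0.5, 0.0, 1.0, 3.0]
alphas = [-1.0, 0.5, 2.0]

for f in (scale, shift, square):
    print(f.__name__, satisfies_linearity(f, samples, alphas))
```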

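In a neural network, linear transformations occur in the linear (fully connected) layers. These layers multiply the input by a weight matrix W and add a bias vector b, computing Wx + b. Strictly speaking, the bias makes this an affine map rather than a linear one, since the output is no longer exactly proportional to the input. Whatever values the weights and biases take, though, the output is always a linear function of the input plus a constant offset, so such layers are conventionally called linear.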
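
A single fully connected layer can be sketched in a few lines of plain Python. The function name `dense` and all numbers are illustrative assumptions, not taken from any library. With a non-zero bias, doubling the input does not double the output. With the bias set to zero, it does.

```python
def dense(W, b, x):
    """Fully connected layer: returns W @ x + b.

    W is a list of rows; b and x are lists of floats (plain Python, no NumPy).
    """
    return [sum(w * v for w, v in zip(row, x)) + b_i
            for row, b_i in zip(W, b)]


# Illustrative weights and bias (hypothetical values).
W = [[1.0, 2.0],
     [0.5, -1.0]]
b = [1.0, 0.0]
x = [1.0, 1.0]

y = dense(W, b, x)
y_doubled = dense(W, b, [2 * v for v in x])
print(y, y_doubled)
```

The first output goes from 4.0 to 7.0 rather than 8.0 because of its bias of 1.0. The second output, whose bias is 0, doubles exactly.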

The Limitations of Linearity

While linear functions are simple and intuitive, they have some key limitations:

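Lack of expressive power - Stacking linear layers with nothing in between just produces another linear layer, because a composition of linear (or affine) maps is itself linear (or affine). However deep such a network is, it can't learn relationships that require non-linear transformations.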

Inability to capture complex patterns - Many relationships in real-world data are non-linear. A linear model can at best approximate them with a straight line, plane, or hyperplane, so it fails to fit these intricate patterns properly.
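
The collapse of stacked linear layers follows from one line of algebra: W2(W1x + b1) + b2 = (W2W1)x + (W2b1 + b2). The result is a single layer with weights W2W1 and bias W2b1 + b2. The sketch below checks this numerically in plain Python, using hand-picked, purely illustrative weights and hypothetical helper names.

```python
def matmul(A, B):
    """Multiply matrices given as lists of rows."""
    return [[sum(a_ik * B[k][j] for k, a_ik in enumerate(row))
             for j in range(len(B[0]))]
            for row in A]


def matvec(A, x):
    return [sum(a * v for a, v in zip(row, x)) for row in A]


def dense(W, b, x):
    """Fully connected layer: W @ x + b."""
    return [v + b_i for v, b_i in zip(matvec(W, x), b)]


# Two stacked layers with no activation in between (illustrative numbers).
W1, b1 = [[1.0, 2.0], [0.0, 1.0]], [1.0, -1.0]
W2, b2 = [[2.0, 0.0], [1.0, 1.0]], [0.5, 0.0]

# Collapse them into one layer: W = W2 W1, b = W2 b1 + b2.
W = matmul(W2, W1)
b = [v + b2_i for v, b2_i in zip(matvec(W2, b1), b2)]

x = [3.0, -2.0]
two_layers = dense(W2, b2, dense(W1, b1, x))
one_layer = dense(W, b, x)
print(two_layers, one_layer)
```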

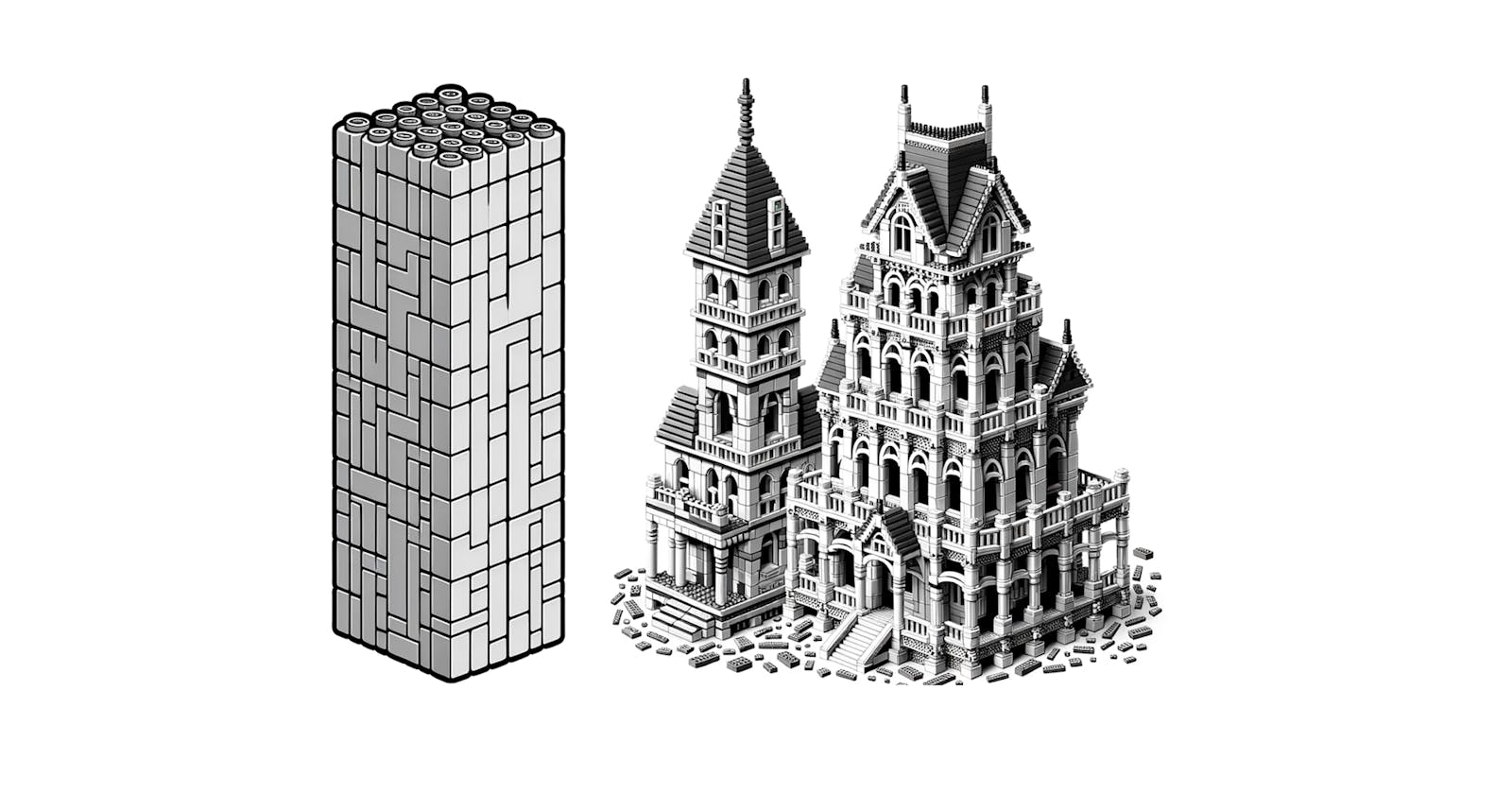

Think of building a LEGO tower using only rectangular blocks. You can stack them to make a tall tower, but you can't build complex shapes. Linear layers in a neural network behave the same way: stacking more of them makes the network deeper, but not more expressive.

Introducing Non-Linearity

To overcome these limitations, we apply a non-linear activation function after each hidden linear layer. Some common activation functions are:

ReLU - Rectified Linear Unit, f(x) = max(0, x)

Sigmoid - f(x) = 1 / (1 + e^(-x))

Tanh - f(x) = (e^x - e^(-x)) / (e^x + e^(-x))
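
The three activations translate directly into Python. This sketch uses only the standard `math` module. `sigmoid` and `tanh` are algebraically rearranged, without changing their values, so that `math.exp` never receives a large positive argument, which would raise `OverflowError`. In real code, `math.tanh` or a framework's built-in activation would normally be used instead.

```python
import math


def relu(x):
    # max(0, x): passes positive values through, zeroes out negatives.
    return max(0.0, x)


def sigmoid(x):
    # 1 / (1 + e^-x), computed in two equivalent ways depending on the
    # sign of x so that math.exp never overflows.
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


def tanh(x):
    # (e^x - e^-x) / (e^x + e^-x), rewritten as (1 - e^-2|x|) / (1 + e^-2|x|)
    # with the sign of x restored (tanh is an odd function).
    t = math.exp(-2.0 * abs(x))
    return math.copysign((1.0 - t) / (1.0 + t), x)


for v in (-2.0, 0.0, 2.0):
    print(f"x={v:+.1f}  relu={relu(v):.3f}  sigmoid={sigmoid(v):.3f}  tanh={tanh(v):.3f}")
```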

Each of these is applied element-wise to the output of a linear layer, transforming it non-linearly before it is passed to the next layer.

It's like adding angled or curved LEGO pieces to build more complex structures. The non-linear activations allow the network to twist and bend its computations to capture intricate patterns.
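
A classic illustration is XOR, whose output should be 1 when exactly one of two binary inputs is 1. No linear (or affine) function f(x1, x2) = a·x1 + b·x2 + c can compute it. Any such function satisfies f(0, 0) + f(1, 1) = f(0, 1) + f(1, 0), while XOR would need 0 + 0 = 1 + 1. The sketch below hand-picks weights for a network with two ReLU hidden units; the weights are an illustrative assumption, not learned. Replacing ReLU with the identity collapses the same network to an affine function, and XOR is lost.

```python
def relu(v):
    return max(0.0, v)


def identity(v):
    # "No activation": the network collapses to a single affine map.
    return v


def xor_net(x1, x2, activation=relu):
    # Hidden layer: two units over the same linear combination x1 + x2,
    # with biases 0 and -1 (weights chosen by hand for illustration).
    h1 = activation(x1 + x2)
    h2 = activation(x1 + x2 - 1.0)
    # Output layer: a linear combination of the hidden units.
    return h1 - 2.0 * h2


inputs = [(0, 0), (0, 1), (1, 0), (1, 1)]
for x1, x2 in inputs:
    print((x1, x2), xor_net(x1, x2), xor_net(x1, x2, identity))
```

With ReLU the outputs are 0, 1, 1, 0. With the identity they become 2, 1, 1, 0.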

Why Non-Linearity is Crucial

Here are some key reasons non-linear activations are crucial:

Increased expressive power - They allow networks to model complex functions beyond just linear operations.

Ability to fit non-linear data - Real-world data is often non-linear, requiring non-linear modeling.

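Make networks universal approximators - A network with at least one hidden layer and a suitable non-linear activation can approximate any continuous function on a bounded input domain to arbitrary accuracy, given enough hidden units (the universal approximation theorem). The theorem guarantees that such a network exists. It does not say how large the network must be, or that training will find it.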

Enable complex feature learning - They allow networks to learn and model intricate relationships in data.
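
One way to see the idea behind universal approximation is to build such a network by hand. This is an illustration, not a proof. A single hidden layer of ReLU units can represent any continuous piecewise-linear function on an interval, and piecewise-linear interpolation of a smooth target gets more accurate as knots are added. The sketch below constructs such a network for x² on [0, 1], using hypothetical helper names `fit_relu_sum` and `evaluate`. The weights are computed directly rather than learned by gradient descent.

```python
def relu(v):
    return max(0.0, v)


def fit_relu_sum(g, n):
    """Hand-build a one-hidden-layer ReLU network with n hidden units that
    linearly interpolates g at n + 1 evenly spaced knots on [0, 1].

    Returns (bias, terms); the network computes
        bias + sum(c * relu(x - k) for c, k in terms)
    """
    knots = [i / n for i in range(n + 1)]
    slopes = [(g(knots[i + 1]) - g(knots[i])) / (knots[i + 1] - knots[i])
              for i in range(n)]
    terms = []
    prev_slope = 0.0
    for i in range(n):
        # Unit i switches on at knots[i] and changes the slope from
        # prev_slope to slopes[i].
        terms.append((slopes[i] - prev_slope, knots[i]))
        prev_slope = slopes[i]
    return g(knots[0]), terms


def evaluate(bias, terms, x):
    return bias + sum(c * relu(x - k) for c, k in terms)


def max_error(g, n, samples=1001):
    """Largest absolute error of the n-unit network over a grid on [0, 1]."""
    bias, terms = fit_relu_sum(g, n)
    xs = [j / (samples - 1) for j in range(samples)]
    return max(abs(evaluate(bias, terms, x) - g(x)) for x in xs)


def target(x):
    return x * x


for n in (2, 4, 8, 16):
    print(f"{n:2d} hidden units: max error {max_error(target, n):.6f}")
```

The worst-case error should shrink roughly fourfold each time the number of hidden units doubles. This is because linear interpolation of x² on intervals of width h is off by at most h²/4.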

Without non-linearities, a deep neural network would be no more expressive than a single linear layer. The combination of linear and non-linear transformations is what gives deep learning models their representational power and flexibility.

Conclusion

In summary, linear layers provide simple, proportional (strictly, affine) transformations, while non-linear activations let the network bend those transformations into far more complex functions. Together, they enable deep neural networks to extract meaningful patterns, model complex data relationships, and perform a wide range of intelligent tasks. Understanding linearity vs. non-linearity is key to designing and leveraging effective deep learning models.